new web: http://bdml.stanford.edu/pmwiki

TWiki > Haptics Web>StanfordHaptics > SkinStretch>MotionDisplayKAUST (13 May 2010, MarkCutkosky)

Human Motion Tracking and Haptic Display

started 14July08 MarkCutkosky

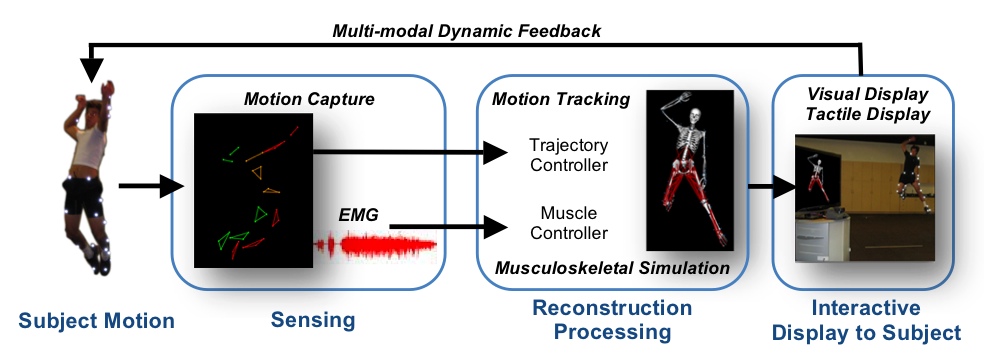

The Human Motion Tracking and Haptic Display project was started in June 2008 under a seed grant from KAUST to the Computer Science Dept. at Stanford. The goal is to create a closed-loop system that can monitor and analyze the motions, forces and muscle activity of subjects' limbs and give them immediate physical feedback. The applications include rehabilitation (e.g., relearning how to walk after a stroke) and athletics (e.g., perfecting a complex motion sequence). The research draws upon and integrates new results in three areas:

- fast algorithms for computing and matching the dynamics of complex human movements to the measured velocities of optical markers (Khatib lab);

- musculoskeletal simulations that predict the muscle forces and velocities associated with human movements (Delp, Besier labs);

- wearable tactile devices that utilize a combination of skin stretch and vibration to provide users with an enhanced perception of joint movement and/or muscle force (Besier and Cutkosky labs). See the HapticsForGaitRetraining page for continuation of this work.

Updates

- MeetingNotesJuly14 (posted)

- HumanMotionPeople - list of people involved

- HumanMotionFdbk-v4.pdf: v4 of the KAUST proposal

- Link to Julie Steele's knee sleeve device: http://www.csiro.au/files/mediaRelease/mr2001/prkneesleeve.htm

- PAT US 2005/0131387 A1: patent found by Grigori Evreinov relating to haptic stimulators on lower leg (e.g. for balance)

- MultimodalfeedbacktopreventACLinjury.doc: skin stretch to reduce ACL injury

System Setup

Vicon Motion Capture

We are using a Vicon Motion System (http://www.vicon.com) for motion capture. To operate our haptic device in real time, we need to pipe motion data out as it is captured instead of collecting data and then post-processing. To develop this we use Vicon's real-time SDK:

- RealTimeSDKmanual.pdf: Vicon's real-time SDK manual

- ViconHardwareReference.pdf: ViconMX Hardware Reference Manual

- ClientCodes.h, ClientCodes.cpp, ExampleClient.cpp: Example code

- TestClient.exe: Example Application (made from example code)

- CG_Beta4.zip: Vicon DLL to enable piping Nexus realtime data into Matlab through TCP
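
To illustrate what piping data out in real time involves on the receiving side, here is a minimal sketch of a TCP client that reassembles marker frames as they arrive, so each frame can be acted on immediately rather than after the whole recording. The wire format, `FrameParser`, `encode_frame` and `stream_frames` are hypothetical names made up for this example. They are not Vicon's protocol or SDK calls, which are described in the attached manual and example code.

```python
import socket
import struct
from typing import Iterator, List, Tuple

# ASSUMPTION: hypothetical wire format, NOT Vicon's protocol.
# Each frame is a little-endian header of two uint32 values
# (frame number, marker count) followed by marker_count (x, y, z)
# triples of float64.
HEADER = struct.Struct("<II")

Frame = Tuple[int, List[Tuple[float, ...]]]


class FrameParser:
    """Reassembles complete frames from arbitrarily split TCP chunks."""

    def __init__(self) -> None:
        self._buf = bytearray()

    def feed(self, chunk: bytes) -> List[Frame]:
        """Append received bytes and return every frame that is now complete."""
        self._buf.extend(chunk)
        frames: List[Frame] = []
        while len(self._buf) >= HEADER.size:
            frame_no, n_markers = HEADER.unpack_from(self._buf, 0)
            total = HEADER.size + n_markers * 3 * 8
            if len(self._buf) < total:
                break  # the rest of this frame has not arrived yet
            coords = struct.unpack_from(f"<{n_markers * 3}d", self._buf, HEADER.size)
            markers = [tuple(coords[i:i + 3]) for i in range(0, len(coords), 3)]
            frames.append((frame_no, markers))
            del self._buf[:total]  # drop the bytes just consumed
        return frames


def encode_frame(frame_no: int, markers: List[Tuple[float, ...]]) -> bytes:
    """Inverse of the parser, handy for testing without a capture system."""
    flat = [c for m in markers for c in m]
    return HEADER.pack(frame_no, len(markers)) + struct.pack(f"<{len(flat)}d", *flat)


def stream_frames(host: str, port: int) -> Iterator[Frame]:
    """Yield frames one at a time as they arrive from a (hypothetical) server."""
    parser = FrameParser()
    with socket.create_connection((host, port)) as sock:
        while True:
            chunk = sock.recv(4096)
            if not chunk:  # server closed the connection
                return
            yield from parser.feed(chunk)
```

A feedback loop would then iterate over `stream_frames(host, port)` and update the tactile device once per frame. The parser buffers partial reads because TCP delivers a byte stream and does not preserve frame boundaries.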

Wearable Tactile Devices

The wearable tactile devices are based on:

- Vibration

- Skin stretch: PortableSkinStretchDesign, PortableSkinStretchSetup

Hardware for Control of Tactile Devices

- Matlab's xPC

- Phidgets

- Arduino

OpenSim

SAI
